Exporting from the Wayback Machine

One of my little projects has been trying to resurrect a corner of web history. It seems like a normal enough story: there used to be a website, it went away, the guy who made it has no backups.

Of course, the Wayback Machine has copies of nearly everything, and this site is no exception. The content isn't gone to the sands of time – but it's also nowhere near accessible, since the individual crawls presented by the Wayback Machine are various fragments.

I want to get the data out of the Wayback Machine, process it into a cohesive form, and give it back to the creator so he can make it available on the web once again.

Getting the data out poses two problems. First, I want to know exactly what data the Wayback Machine contains. Second, I want to retrieve the items of interest.

Querying

The Wayback Machine is basically a big distributed filesystem that contains 5+ petabytes of archived content. The data is stored in WARC files, each weighing about a gigabyte.

WARCs (and ARCs before them) are just blobs containing record after record after record with no features to support random access. That's a problem, so the Wayback Machine generates secondary indexes called CDX files. These allow a reader to jump straight to a particular record without having to read (and decompress!) an entire gigabyte of crawl results.

The Internet Archive offers a CDX Server API. This allows you to phrase a query and get back a list of records that match. For example, here's the first record for google.com:

com,google)/ 19981111184551 http://google.com:80/ text/html 200 HOQ2TGPYAEQJPNUA6M4SMZ3NGQRBXDZ3 381

This tells us more than enough: the URL, timestamp, HTTP status code, path, content type, hash, and length.

In short, the CDX server allows me to get a list of archived resources with enough detail for me to decide if it's an object I need.

Retrieval

This is where it gets harder. See, the Wayback Machine is designed for use by humans, but I want to hook it into a machine.

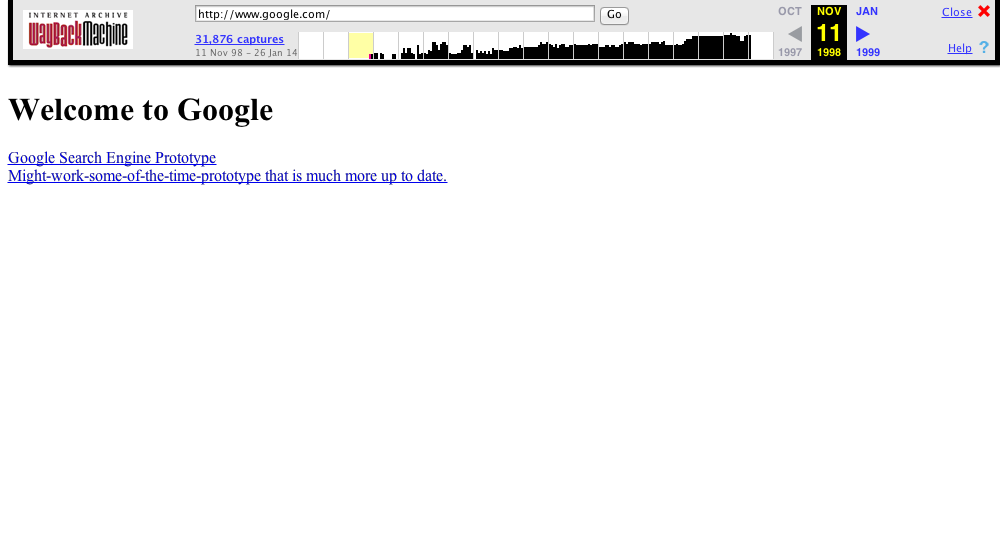

Check out the Wayback Machine for google.com, 1998-11-11:

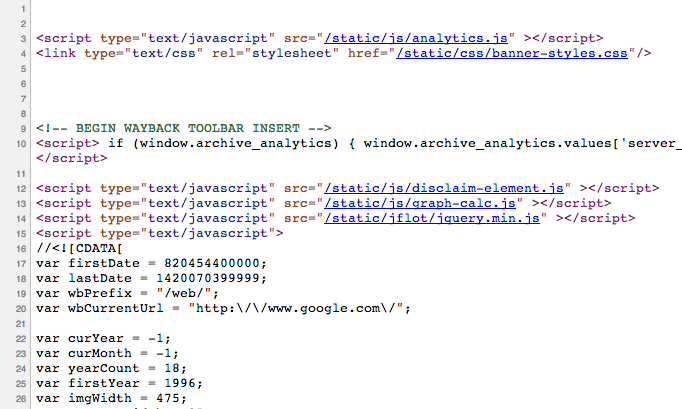

See the bar up top? View source:

Ewww. The Wayback Machine added 250 lines of not-quite-HTML. This makes it easy for humans to navigate, but it complicates machine parsing.

I dug around the Wayback machine source code and discovered a solution. The /web/YYYYMMDDHHMMSS/<url> syntax accepts flags after the timestamp. This is used to trigger certain filters from various contexts: <link rel="stylesheet"> links are rewritten to trigger CSS-specific processing, <img> links are rewritten to trigger image-specific processing, <script> does Javascript, etc.

I discovered that this mechanism supports an id flag. This flag ultimately causes the resource to be handled by TransparentReplayRenderer, which attempts to hand back the content in an unmodified form.

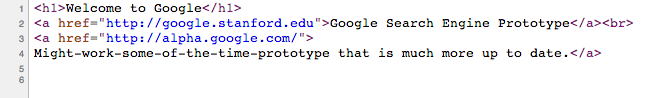

View source on /web/19981111184551id_/http://www.google.com/:

Bingo!

I'd prefer a way to get the corresponding WARC record for maximum fidelity, but that's not available right now. The headers aren't quite preserved – some new ones get added and I think some of the originals get clobbered in various situations, but it's better than the alternatives. At least the HTTP response body isn't completely rewritten.

(There's another way to trigger this behavior too, but I'm not sure it's intentional and it might have security implications.)

Now, the question is: does archive.org intend for me to connect these two services? I'm guessing no – the terms of service grant access "for scholarship and research purposes only", and their robots.txt forbids machine access. Still, the existence of the CDX server indicates they support certain types of machine interaction, so I wrote them an email to ask. I'll keep you posted.